Introducing Sora and video playground in Azure AI Foundry

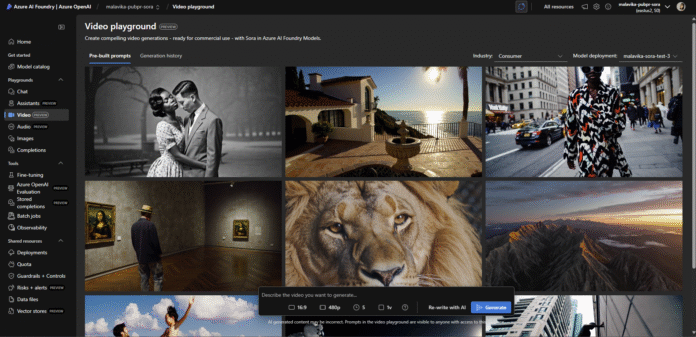

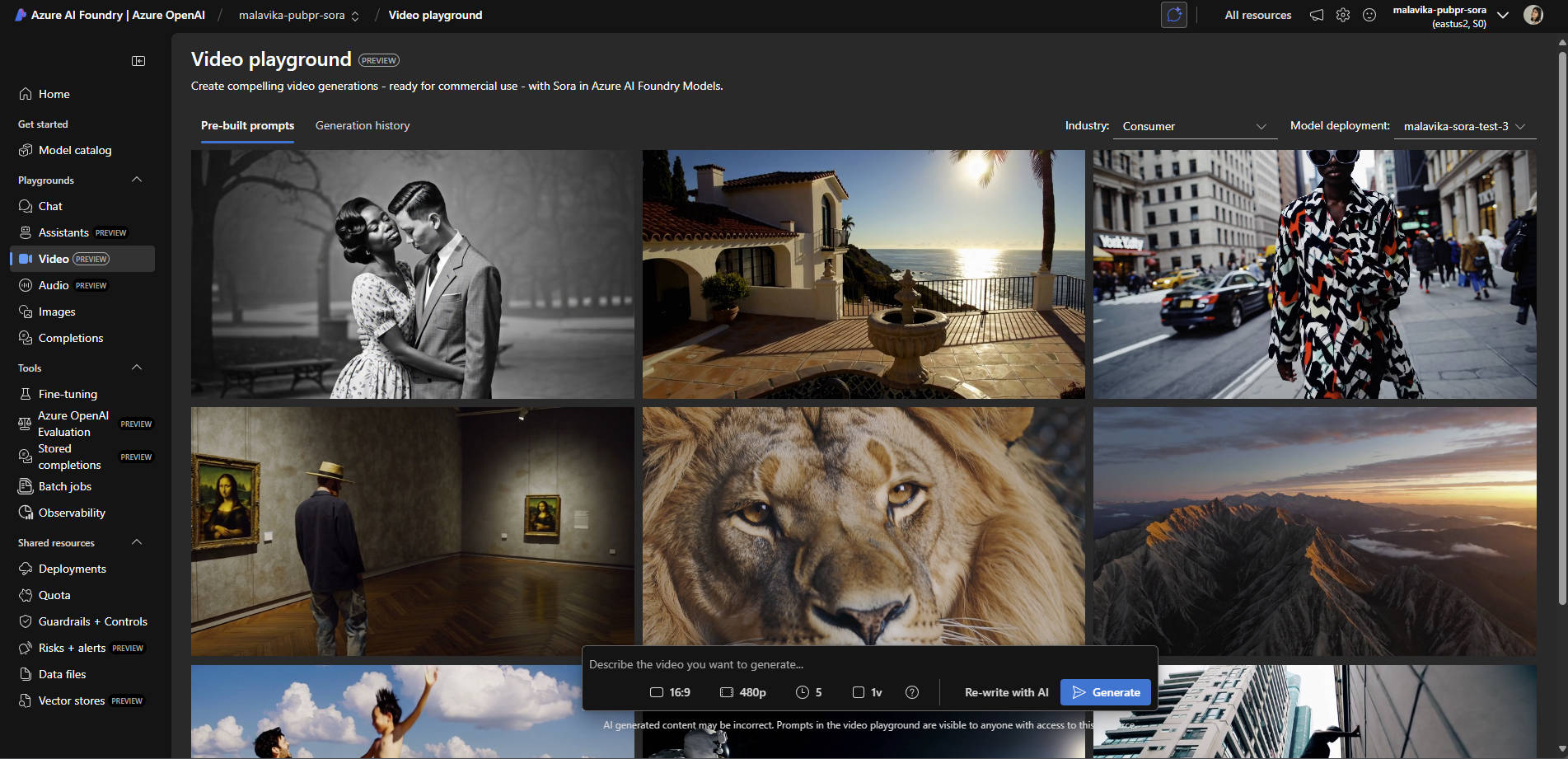

The video playground in Azure AI Foundry is your high-fidelity testbed for prototyping with cutting-edge video generation models, like Sora from Azure AI Foundry Models, ready for business use. Read our Tech Community launch blog on gpt-image-1 and Sora.

Modern development involves working across multiple systems (APIs, services, SDKs, and data models), often before you’re ready to fully commit to a framework, write tests, or spin up infrastructure. As the complexity of software ecosystems increases, the need for safe, lightweight environments to validate ideas becomes critical. Video playground was built to meet this need.

Purpose-built for developers, video playground offers a controlled environment to experiment with prompt structures, assess model consistency relative to prompt adherence, and optimize outputs for industry use cases. Whether you’re building AI-native video products or tools, or transforming your enterprise workflows, video playground enhances your planning and experimentation so you can iterate faster, de-risk your workflows, and ship with confidence.

Rapidly prototype from prompt to playback to code

Video playground offers an on-demand, low-friction setup environment designed for rapid prototyping, API exploration and technical validation with video generation models. Think of video playground as your high-fidelity prototyping environment, built to help you build better, faster and smarter, with no need to configure localhost, import clashing dependencies or worry about compatibility between build and model.

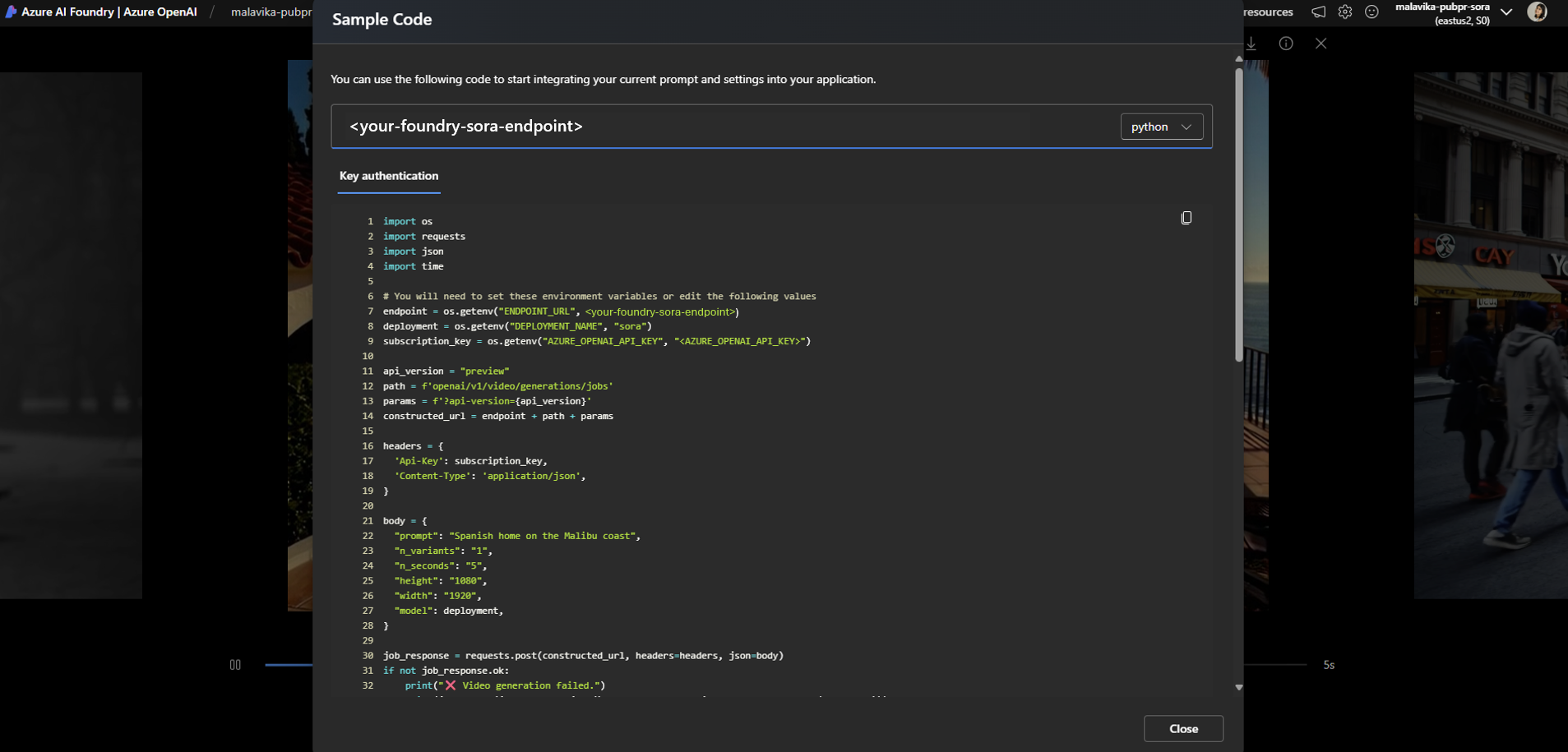

Sora from Azure OpenAI is the first release for video playground, and the model comes with its own API, a unique offering available to Azure AI Foundry users. Once your initial experimentation is done in the video playground, using the API in VS Code lets you move to scaled development for your use case.

- Iterate faster: Experiment with text prompts and adjust generation controls like aspect ratio, resolution and duration.

- Prompt optimization: Debug, tune and re-write prompt syntax with AI, visually compare outcomes across the variations you’re testing, use prebuilt industry prompts, and build your own prompt variations available in the playground, grounded in model behavior.

- Consistent interface for API: Everything in video playground mirrors the model API structure, so what works here translates directly into code, with predictability and repeatability.
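To make the idea of controls that translate directly into code more concrete, here is a minimal Python sketch of how the playground's generation controls (prompt, resolution, duration) could be captured as a typed object and turned into a request body. The class name, field names, default values and the `model` key are illustrative assumptions, not the documented Sora API schema; copy the exact fields from the playground's "View Code" output before sending anything to a real endpoint.

```python
from dataclasses import dataclass, asdict
from math import gcd


@dataclass(frozen=True)
class GenerationControls:
    """Playground-style controls. Field names are hypothetical, not the official schema."""

    prompt: str
    width: int = 480
    height: int = 480
    duration_seconds: int = 5

    def __post_init__(self) -> None:
        # Reject obviously invalid settings before they reach any API call.
        if self.width <= 0 or self.height <= 0 or self.duration_seconds <= 0:
            raise ValueError("width, height and duration_seconds must be positive")

    def aspect_ratio(self) -> str:
        """Reduce width:height to its simplest form, e.g. 1280x720 becomes 16:9."""
        divisor = gcd(self.width, self.height)
        return f"{self.width // divisor}:{self.height // divisor}"

    def to_request_body(self, deployment: str) -> dict:
        """Build a JSON-serializable body; 'model' is an assumed key for the deployment name."""
        body = asdict(self)
        body["model"] = deployment
        return body
```

Keeping the controls in one immutable object means the settings you settled on in the playground can be logged, compared and replayed without retyping them.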

Features

We built video playground for developers who want to experiment with video generation. Video playground is a full-featured controlled environment for high-fidelity experiments designed for model-specific APIs, and a great demo interface for your Chief Product Officer and Engineering VP.

- Model-specific generation controls: Adjust key controls (e.g. aspect ratio, duration, resolution) to deeply understand specific model responsiveness and constraints.

- Pre-built prompts: Get inspired on how you can use video generation models like Sora for your use case. In the pre-built prompts tab, there’s a set of 9 curated videos by Microsoft.

- Port to production with multi-lingual code samples: In the case of Sora from Azure OpenAI, this reflects the Sora API, a unique offering available to Azure AI Foundry users. Use the “View Code” multi-lingual code samples (Python, JavaScript, Go, cURL) for your video output, prompts and generation controls, which mirror the API structure. What you create in the video playground can be easily ported into VS Code so that you can continue scaled development with the API.

- Side-by-side observations in grid view: Visually observe outputs across prompt tweaks or parameter changes.

- Azure AI Content Safety integration: With all model endpoints integrated with Azure AI Content Safety, harmful and unsafe videos are filtered.
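If you later want to reproduce a grid-view style comparison in your own code, one simple approach is to enumerate every combination of prompt, resolution and duration up front. The sketch below is only an illustration: the function name and the dictionary keys are hypothetical and do not come from the playground or the Sora API.

```python
from itertools import product


def build_variants(prompts, resolutions, durations):
    """Return one settings dict per (prompt, resolution, duration) combination.

    Keys are illustrative placeholders, not official API field names.
    """
    return [
        {"prompt": prompt, "width": width, "height": height, "duration_seconds": seconds}
        for prompt, (width, height), seconds in product(prompts, resolutions, durations)
    ]


# Example: 2 prompts x 2 resolutions x 2 durations gives 8 cells to compare.
variants = build_variants(
    prompts=["A paper boat drifting on a pond", "A paper boat drifting on a pond, slow pan"],
    resolutions=[(480, 480), (1280, 720)],
    durations=[5, 10],
)
```

Changing one dimension at a time keeps the comparison readable, since any visible difference between neighbouring cells maps back to a single tweak.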

See a demo of these features and Sora in video playground in our dedicated breakout session at Microsoft Build 2025.

No need to find, build or configure a custom UI on localhost for video generation, hope that it will automatically work for the next state-of-the-art model, or spend time resolving cascading build errors caused by the package or code changes that new models require. The video playground in Azure AI Foundry gives you version-aware access. Build with the latest models, with API updates surfaced in a consistent UI.

What to test for in video playground

As you plan your production workload, consider the following while visually assessing your generations in video playground:

- Prompt-to-Motion Translation

  - Does the video model interpret my prompt in a way that makes logical and temporal sense?

  - Is motion coherent with the described action or scene? How could I use Re-write with AI to improve my prompt?

- Frame Consistency

  - Do characters, objects, and styles remain consistent across frames?

  - Are there visual artifacts, jitter, or unnatural transitions?

- Scene Control

  - How well can I control scene composition, subject behavior, or camera angles?

  - Can I guide scene transitions or background environments?

- Length and Timing

  - How do different prompt structures affect video length and pacing?

  - Does the video feel too fast, too slow, or too short?

- Multimodal Input Integration

  - What happens when I provide a reference image, pose data, or audio input?

  - Can I generate video with lip-sync to a given voiceover?

- Post-Processing Needs

  - What level of raw fidelity can I expect before I need editing tools?

  - Do I need to upscale, stabilize, or retouch the video before using it in production?

- Latency & Performance

  - How long does it take to generate video for different prompt types or resolutions?

  - What’s the cost-performance tradeoff of generating 5s vs. 15s clips?
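The latency questions above are the easiest ones to answer with numbers once you move from the playground to code. Here is a minimal timing sketch in Python. It assumes you supply your own `generate` callable that blocks until a video is ready; that callable, the config keys and the function name are all hypothetical, and nothing here calls the Sora API itself.

```python
import time
from statistics import mean


def time_generations(generate, configs, repeats=1, clock=time.perf_counter):
    """Time generate(config) for each config and report the mean wall-clock seconds.

    generate: any callable that blocks until generation completes (user-supplied).
    clock: injectable so the helper can be exercised without real waiting.
    """
    results = []
    for config in configs:
        samples = []
        for _ in range(repeats):
            start = clock()
            generate(config)
            samples.append(clock() - start)
        results.append({"config": config, "mean_seconds": mean(samples)})
    return results
```

Run it with a 5-second and a 15-second configuration and put the measured latency next to your per-clip cost to judge the tradeoff for your own workload.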

Run Sora and other models at scale using Azure AI Foundry, with no infrastructure needed. Learn more in our recent Microsoft Mechanics video, which shows the Sora API in action.

Get started now

- Sign in or sign up to Azure AI Foundry.

- Create a Foundry Hub and/or Project.

- Create a model deployment for Azure OpenAI Sora from the Foundry Model Catalog or directly from video playground.

- Prototype in video playground; iterate over text prompts and optimize generation controls for your use case.

- Prototype done? Switch to scaled development in VS Code with the Sora from Azure OpenAI API.

Create with Azure AI Foundry

- Read our Tech Community blog on gpt-image-1 and Sora.
